OpenAI, in case you have been sleeping under a rock for the past four years, is an artificial intelligence research lab founded by Elon Musk, Sam Altman, and others, with backing from tech titans like Peter Thiel, Reid Hoffman, and Jessica Livingston. It started as a non-profit organization in late 2015, but in 2019 it restructured around a “capped-profit” company and secured a whopping $1 billion investment from Microsoft. Along with their impressively deep pockets, they have an equally lofty mission statement: “to ensure that artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity”. In other words, they not only believe that artificial general intelligence is coming, they also feel an urgency to be the first to achieve it, so they can make sure it is not used to the detriment of humankind.

After demonstrating some impressive but seemingly non-disruptive AI experiments, like an AI debate game and a robot hand that can solve the Rubik’s Cube, they seem to have hit their stride with an ongoing series of hugely impressive text generation models called GPT (which stands for Generative Pre-trained Transformer). The most recent one, GPT3, was described in a paper in May 2020 and opened to a private API beta in June 2020, and it demonstrated an amazing ability to perform a wide variety of tasks from very few examples. GPT3 was highly anticipated, partly because OpenAI had delayed the full release of its predecessor, GPT2, over fears of misuse such as automatically generating fake news for social media.

Artificial Intelligence is a wide area of research focused on creating software that mimics abilities considered human-like or intelligent, such as reasoning, understanding language, or beating a human chess master. That said, in recent years Artificial Intelligence (or AI for short) has become almost synonymous with one specific computer science technique called Machine Learning. Instead of hard-coding the set of instructions required to perform a task, Machine Learning algorithms work in two phases: training and inference. The training phase takes a big list of data points as input and uses it to build the inference model, by iteratively adjusting the model’s parameters to minimize a pre-programmed “loss function”. To give you some intuition for how this works, let’s take the example of image classification. The image classifier itself is a function that uses some parameters to guess what’s in an image. You can think of its loss function as a judge that quantifies how wrong each guess was. To train the classifier, the algorithm starts with a random set of parameters and a list of pictures that a human has already tagged with what’s in them. In every iteration, the parameters are modified slightly in the direction that makes the “judge” score the guesses as less wrong. Once the parameters stop improving, they are fixed and used to classify new images in subsequent inference requests. GPT3 is one such machine learning model.
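The training loop described above can be sketched in a few lines. This is a minimal illustration, not how GPT3 or a real image classifier is trained: it swaps the pictures for a single made-up numeric feature (the hypothetical `DATA` list), uses logistic regression as the classifier, average cross-entropy as the loss “judge”, and plain gradient descent to nudge the parameters.

```python
import math
import random

# Hypothetical stand-in for human-tagged pictures: each item is
# (feature, label), where the single feature might be "how cat-like the
# image looks" and label 1 means "cat", 0 means "not a cat".
DATA = [(0.9, 1), (0.8, 1), (0.7, 1), (0.3, 0), (0.2, 0), (0.1, 0)]


def predict(w: float, b: float, x: float) -> float:
    """The classifier: probability that input x has label 1."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def loss(w: float, b: float) -> float:
    """The 'judge': average cross-entropy, i.e. how wrong the guesses are."""
    total = 0.0
    for x, y in DATA:
        p = predict(w, b, x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(DATA)


def train(steps: int = 2000, lr: float = 1.0, seed: int = 0) -> tuple[float, float]:
    """Start from random parameters and repeatedly nudge them to lower the loss."""
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    n = len(DATA)
    for _ in range(steps):
        # Gradient of the cross-entropy loss with respect to w and b.
        grad_w = sum((predict(w, b, x) - y) * x for x, y in DATA) / n
        grad_b = sum(predict(w, b, x) - y for x, y in DATA) / n
        # Move each parameter a small step in the direction that lowers the loss.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    w, b = train()
    print(f"loss with all-zero parameters: {loss(0.0, 0.0):.3f}")  # 0.693
    print(f"loss after training: {loss(w, b):.3f}")
    # Inference: apply the trained parameters to inputs not seen in training.
    print(predict(w, b, 0.85) > 0.5, predict(w, b, 0.15) > 0.5)  # True False
```

Real models follow the same recipe, only with millions or billions of parameters and gradients computed automatically by a framework instead of by hand.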

Diving a little deeper, GPT3 is trained on huge amounts of unlabeled text to create a text generator that “infers” the text that most plausibly continues a given input. No human tagging is needed, because the “correct answer” for every word in the training data is simply the word that comes next. This description sounds very generic because the model is designed to remain non-specific. GPT3 then applies this general knowledge to specific tasks, a form of Transfer Learning. Earlier models usually needed an additional training step (called fine-tuning) on another, much smaller, set of task-specific samples; GPT3 can often pick up a new task from just a few examples written directly into its input text, with no further training at all. The model itself is absolutely humongous. With around 175 billion parameters, it was by far the largest language model ever created at the time of its release, and it was trained on roughly 300 billion tokens (word fragments) of text drawn from a filtered crawl of the web, collections of books, and the English-language Wikipedia. Its sheer size means that the cost of the compute power required to train it is estimated by some to exceed $12 million. There are two main reasons for the huge waves GPT3 is making in the industry. First, it demonstrated an unprecedented ability to handle seemingly unrelated language tasks, building on top of its massive knowledge to effectively perform tasks like translation, writing articles, and even some simple arithmetic. Second, and perhaps even more important, its performance kept improving steadily with scale. GPT3 is more than 100 times larger than GPT2 (175 billion versus 1.5 billion parameters) while sharing essentially the same architecture, and across many tasks its results improved smoothly as model size grew, with no sign of leveling off. Put simply, this suggests that tasks it can only somewhat do today might be improved, possibly to above human level, just by increasing the size of the model. This had not been demonstrated at such a scale before, and some hypothesize that it is the path to that goal of achieving artificial general intelligence.
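The idea that raw, unlabeled text supplies its own training signal can be illustrated with a deliberately tiny stand-in. The sketch below is a bigram model, not a Transformer: it only looks one word back and simply counts word pairs instead of learning billions of parameters. The corpus and the `train_bigrams` and `generate` functions are made up for illustration.

```python
from collections import Counter, defaultdict

# Raw, unlabeled text: no human has tagged anything. The "label" for each
# word is simply the word that follows it.
CORPUS = "the cat sat on the mat . the dog sat on the rug ."


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the raw text."""
    words = text.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(model: dict[str, Counter], prompt: str, n_words: int = 3) -> str:
    """Greedily extend the prompt by repeatedly picking the most frequent next word."""
    words = prompt.split()
    if not words:
        return ""
    for _ in range(n_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # this word was never followed by anything in the corpus
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(generate(model, "the dog"))  # the dog sat on the
```

GPT3 follows the same predict-what-comes-next principle, but it conditions on up to 2,048 preceding tokens rather than a single word, which is what lets examples placed in the input steer its output.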

Excel magic using GPT3

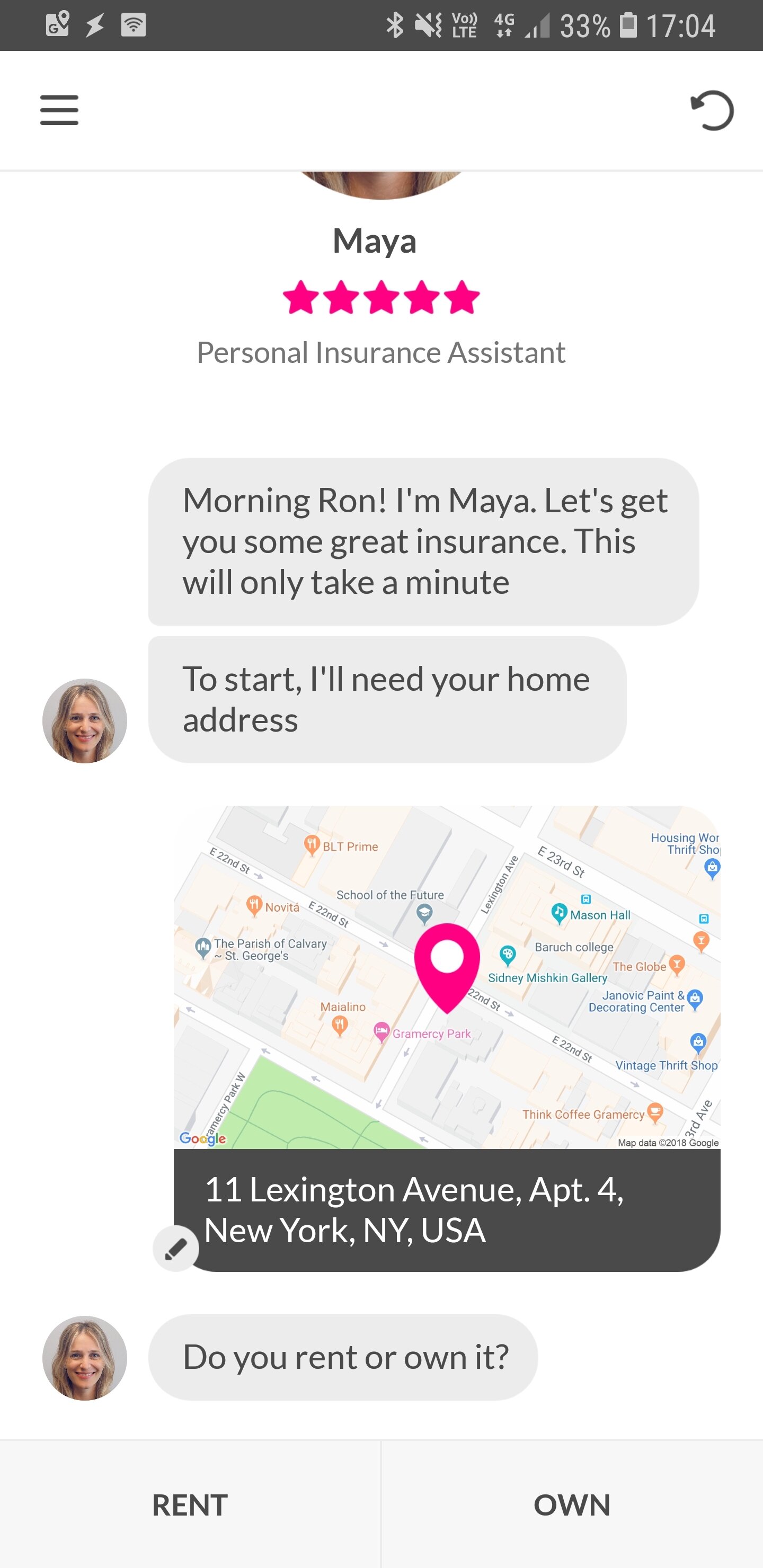

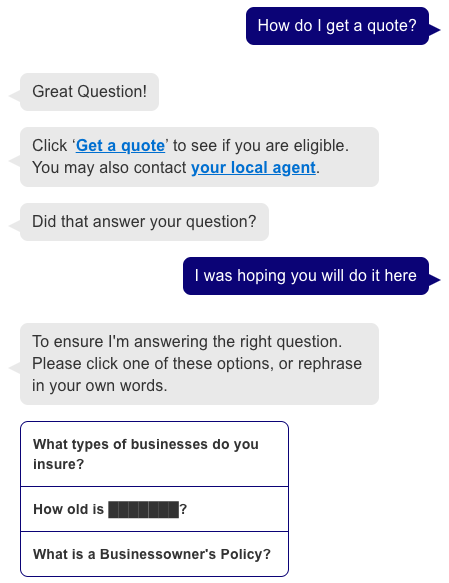

The multitude of amazingly impressive natural language demonstrations makes me wonder: will GPT3 change the conversational AI landscape? My opinion is that while the current state of GPT3 is not in itself a tectonic shift in conversational AI, it certainly suggests that in the future there might be one algorithm to rule them all. There are a few reasons it is not a tectonic shift just yet. It has shown very promising question answering abilities, but it has not yet demonstrated contextual conversations, where each answer depends on what was said in earlier turns. Even if there were a way to train it with full conversations so that it would predict the next turn of a contextual conversation, it would still be very much a black box. That means there would be no way to pre-approve the wording of the answers it provides, which makes it impractical for enterprises to use. Furthermore, customizing the conversation flow would only be possible by going into the samples and editing them to match the desired outcome. That is, again, impractical for any real use case. With all that said, it is important to remember that OpenAI hasn’t yet released the API that lets developers use its pre-trained model to the general public (access is currently limited to a private beta), so it’s hard to know for sure how it will behave in real-life applications.
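One common way to attempt a contextual conversation with a text generator, without any new training, is to pack the whole conversation history into the input text and let the model continue it. The sketch below only builds such a prompt; the `Turn` class, the `build_prompt` function, and the “User:”/“Bot:” transcript format are hypothetical illustrations, and the call that would send the prompt to GPT3 is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # e.g. "User" or "Bot" (hypothetical labels)
    text: str


def build_prompt(instructions: str, history: list[Turn], bot_name: str = "Bot") -> str:
    """Serialize a conversation so a text generator can predict the next turn.

    The prompt ends with the bot's name and a colon, so the most natural
    continuation of the text is the bot's next reply.
    """
    lines = [instructions.strip(), ""]
    lines += [f"{turn.speaker}: {turn.text}" for turn in history]
    lines.append(f"{bot_name}:")
    return "\n".join(lines)


if __name__ == "__main__":
    history = [
        Turn("User", "What are your opening hours?"),
        Turn("Bot", "We are open 9am to 5pm, Monday to Friday."),
        Turn("User", "And on weekends?"),
    ]
    print(build_prompt("The following is a conversation with a support bot.", history))
```

This also makes the limitations above concrete: whatever the model writes after the final `Bot:` is free-form generated text, so there is no built-in way to pre-approve its wording, and steering the flow means editing the instructions and sample turns by hand.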